Watching the Tokyo 2020 Olympics in VR

The Tokyo Olympics were “broadcast” in VR. What was it like? What was good – and even great! – and what could be improved?

(Update April ’22: there is now also an insider review of Beijing 2022 in VR)

Watching the Tokyo 2020 Olympics in VR – an Insider Review

Past Olympic events have been distributed in VR, to mixed reviews. The Tokyo “2020” Olympics were again distributed in VR180 / 360, with surprisingly little publicity.

And that’s a crying shame. The VR production was absolutely amazing. It deserved the widest possible audience.

And while I have developed some strong ideas over the past few years on how VR180/360 should be produced and delivered, I was in for a few surprises myself.

Tiledmedia supplied the client-side audiovisual playback technology for the NBC Olympics VR by Xfinity app, which was created by Cosm. I’ve been one of the fortunate few in Europe to be able to see many of the 47 events that were distributed live, in VR. I had to stay up late and get up early, but it was worth it. So let me share a few of my thoughts.

A good video of the experience is here. My thoughts below pertain to the headset experience. It’s the most immersive experience and the most difficult to get right. But if done well, it’s definitely where sports fans will spend their viewing hours.

Production is Everything

Again, the production was of superb quality. Six camera positions were available to users: five stereoscopic VR180 cameras and one monoscopic VR360 camera. Users could select these cameras from a small map representing the physical space. This allowed them, for instance, to choose between the High Jump and the Javelin Throw in Track & Field.

In Gymnastics, there were cameras near all apparatuses. In Basketball, viewers could choose cameras mounted behind the baskets, in the stands, or at the sideline. Ditto for Boxing and Beach Volleyball.

Augmenting the VR cameras, there were virtual “Jumbotrons”: large virtual TV screens that floated in an appropriate location in the scene. These Jumbotrons carried the TV broadcast with commentary. A very nice touch was the way these screens were made larger and brought closer to the user for key replays, and then “flew” back again. This was especially appealing in 3D – more on that below.

Additionally, and arguably the best way to follow the action, there was the so-called “VR Cast”. This is a “director cut” that switches between the 180 feeds, following the main action. It’s a great feature, and the Jumbotron provides continuity across the director’s switches. Audio is seamless as well, because it is tied to the Jumbotron rather than to the individual camera.

But if you wanted to be your own director, that was entirely possible too, and the Jumbotron feed with its commentary and ambient audio stayed seamless and continuous as you switched cameras.

So, what did I learn?

Monoscopic or Stereoscopic?

I was surprised to see how compelling I found the stereoscopic effect. The app allowed switching between monoscopic (“2D”) and stereoscopic (“3D”), so it was easy to compare. I used to think that stereo added very little extra value, and that it wasn’t worth the inevitable resolution sacrifice. (Since you need to stuff a left eye video and a right eye video into a 4K decoder instead of just one single video for both eyes, the horizontal resolution is cut in half.)
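To make the resolution sacrifice concrete, here is a minimal sketch of the arithmetic. It assumes a 4096 x 2048 decoded frame (the 4K size quoted later in this post) and a side-by-side packing of the two eyes; the function name and the `layout` parameter are hypothetical, for illustration only.

```python
def per_eye_resolution(frame_width, frame_height, stereo, layout="side_by_side"):
    """Return the (width, height) each eye gets out of one decoded frame."""
    if not stereo:
        # Monoscopic: both eyes look at the same full frame.
        return frame_width, frame_height
    if layout == "side_by_side":
        # Left and right eye share the width of the frame.
        return frame_width // 2, frame_height
    if layout == "top_bottom":
        # Left and right eye share the height of the frame.
        return frame_width, frame_height // 2
    raise ValueError(f"unknown layout: {layout}")


mono = per_eye_resolution(4096, 2048, stereo=False)
stereo = per_eye_resolution(4096, 2048, stereo=True)
print("mono per eye:", mono)
print("stereo per eye:", stereo)
```

Under these assumptions each eye ends up with half the columns of the mono case, which is the halving described above.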

The resolution downgrade was clearly there and caused some interesting artefacts, but “3D” definitely added to the experience.

Getting close to the action is paramount. Take track and field: the high jump and the pole vault were amazing. Running was nice too, but different; I still felt like I was present, but it was harder to really follow the action. That said, I had never experienced the sheer speed of the runners in the 100 meter sprint on TV as I did in VR. Being there virtually, even high in the stands and far away, made that speed apparent like no TV program ever has.

Again, the camera positions were really good, for gymnastics, track & field, boxing, beach volleyball and also basketball.

Stereo and proximity: a difficult trade-off

There’s an interesting and difficult trade-off in proximity and the use of stereoscopic video. Doing a production in 3D creates a challenge for graphics and any virtual environment, and that challenge becomes harder as objects are closer. Close objects may be a rope in boxing, the tartan for a camera close to the ground in track and field, the basket for a camera mounted behind it, and the net for volleyball.

These close objects make getting the stereo right across all elements (video, environment, graphics inserts) very hard. And I would argue that even the 3D video alone is already challenging enough to get right with close objects. In addition (and even without close objects) the alignment of graphics elements – including their animation – requires careful consideration when working in 3D. At the Olympics, this was handled with care.

Unfortunately, the horizontal downsampling visibly reduced the quality, in two ways. The obvious one is that throwing out 50% of the pixels per eye reduces resolution. But there is a second effect: a distracting interaction between the two eyes when one eye gets only the even pixels and the other only the odd ones. Perhaps a more sophisticated downsample would have reduced the effect.
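The even/odd effect can be illustrated with a toy example. This is only a sketch, not the production pipeline: it takes a single row of pixel values, uses the same row for both eyes for simplicity, and compares plain column dropping with a simple two-tap averaging filter, which is just one possible alternative chosen here for illustration.

```python
def decimate(row, phase):
    """Keep every second sample, starting at index `phase` (0 = even, 1 = odd)."""
    return row[phase::2]


def box_downsample(row):
    """Average each neighbouring pair of samples (a simple two-tap box filter)."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row) - 1, 2)]


# Fine detail alternating every pixel: the worst case for column dropping.
row = [0, 255] * 4

left_eye = decimate(row, 0)     # sees only the even columns
right_eye = decimate(row, 1)    # sees only the odd columns
filtered = box_downsample(row)  # both eyes would see the same averaged values

print("left eye :", left_eye)
print("right eye:", right_eye)
print("filtered :", filtered)
```

With plain decimation the two eyes receive completely different values for the same detail, which the brain has to reconcile. With the averaged version the detail is lost as well, but both eyes at least agree.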

Frame rate is another consideration. The Olympics app allowed switching between 60 and 72 Hz screen refresh rates. For video smoothness, the 60 Hz setting looked best, as it matches the production frame rate. Unfortunately, there is visible flicker at a 60 Hz screen refresh rate. At 72 Hz, even though it’s only a small step up, the display is much easier on the eye: flicker is reduced significantly. But there is a downside: at 72 Hz the video is less smooth, because the video frames no longer line up one-to-one with the screen refreshes. I found myself flipping back and forth, depending on the type of sport.
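Why a slightly higher refresh rate makes the video less smooth can be shown with a small calculation. The sketch below assumes 60 fps video and a player that simply shows the most recent video frame at every screen refresh, without any frame interpolation; the function name is hypothetical.

```python
def refreshes_per_frame(video_fps, display_hz, num_frames):
    """Count how many screen refreshes each of the first `num_frames` video frames is shown for."""
    counts = [0] * num_frames
    refresh = 0
    while True:
        # Index of the video frame that is current at this refresh.
        frame = refresh * video_fps // display_hz
        if frame >= num_frames:
            break
        counts[frame] += 1
        refresh += 1
    return counts


print("60 fps on 60 Hz:", refreshes_per_frame(60, 60, 10))
print("60 fps on 72 Hz:", refreshes_per_frame(60, 72, 10))
```

At 60 Hz every frame is shown exactly once. At 72 Hz, one frame in every five stays on screen for two refreshes, and that uneven cadence is what shows up as judder in fast motion.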

On another note, accurate cross-camera sync is very important when doing stereoscopic video with two camera bodies.

180 or 360?

Then there is 360 versus 180. Generally, 180 provides enough of a viewing angle to feel immersed, especially with an appropriate, spacious virtual environment that shows as much as possible of the 180 video and doesn’t block anything off.

But there was also one VR360 camera at all events. It was fun to be able to look around. The 360 camera was not part of the VR Cast (the “director cut”) and it was only available in mono, which made the camera a bit of an odd one out. It was also an outlier for another reason: the resolution.

The 180 cameras used fisheye lenses which give unequal resolution across the hemisphere. There is very decent pixel density (i.e., resolution, sharpness) where it matters most: in the center of the sensor. The number of pixels per degree tapers off towards the edges, where the “worst”, lowest resolution parts of the image are blocked off by the virtual environment.
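As a rough illustration of that taper, the sketch below assumes an idealised equisolid-angle fisheye, where the image radius is r = 2 f sin(θ/2). That model is an assumption for illustration only: the real lens was custom-calibrated and will deviate from it. The code only looks at pixel density along the radial direction.

```python
import math


def radial_density_ratio(theta_deg):
    """Radial pixels-per-degree at off-axis angle theta, relative to the image centre.

    For r = 2 f sin(theta / 2), dr/dtheta = f cos(theta / 2), so the ratio
    to the centre value (theta = 0) is simply cos(theta / 2).
    """
    return math.cos(math.radians(theta_deg) / 2)


for theta in (0, 30, 60, 90):
    print(f"{theta:2d} deg off-axis: {radial_density_ratio(theta):.2f} x centre density")
```

In this idealised model the radial density at the very edge of the hemisphere (90 degrees off-axis) is about 0.71 times the density in the centre.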

That’s different for a VR360 feed. It was also distributed in 4K, with pixels spread out across the entire sphere. A lot of encoded pixels are wasted on the zenith and the nadir, which get expanded in the EquiRectangular Projection (ERP) that was employed. That’s the default for VR360, but it gives the most resolution to the parts of the video that are watched the least. There are alternatives, like a cubemap projection, but such an alternative wasn’t used this time.
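How much ERP over-spends on the poles follows from basic geometry. The sketch below compares the share of ERP pixels that land above a given latitude (north and south combined) with the share of the sphere's surface that region actually covers. The 60 degree threshold is an arbitrary choice for illustration.

```python
import math


def erp_pixel_share(min_lat_deg):
    """Share of ERP pixels at latitudes beyond +/- min_lat_deg.

    ERP rows are spaced evenly in latitude, so the share is just the
    fraction of the 0..90 degree range that lies beyond the threshold.
    """
    return (90 - min_lat_deg) / 90


def sphere_area_share(min_lat_deg):
    """Share of the sphere's surface at latitudes beyond +/- min_lat_deg."""
    return 1 - math.sin(math.radians(min_lat_deg))


lat = 60
print(f"ERP pixels beyond {lat} deg : {erp_pixel_share(lat):.1%}")
print(f"sphere area beyond {lat} deg: {sphere_area_share(lat):.1%}")
```

For a 60 degree threshold, about a third of the ERP pixels go to roughly 13% of the sphere, and that is the region around the zenith and nadir where viewers rarely look.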

In other words, the 360 was nice but lacked resolution compared with the 180 cameras. (As a bit of a shameless plug, this is what ClearVR solves – see below.)

4K 180 equals 8K 360?

And speaking of resolution and 180 vs 360: here’s an interesting observation. It’s a bit hard to explain, but let me try anyway. The resolution in the center of the view is about the same for VR180 at 4K with a fisheye lens and VR360 at 8K.

That sounds logical: 360 is twice 180 and 8K is twice 4K, right? Not really. 8K video (8192 x 4096) holds four times the number of pixels of 4K (4096 x 2048) monoscopic VR, so 8K should still have significantly higher resolution. But with fisheye lenses, the resolution is not evenly distributed: it is higher in the center of the view than it is at the edges. A VR360 video in cubemap projection has a much more uniform pixel distribution over the entire sphere. And that’s why the resolution (pixels-per-degree) in the center of a 4K fisheye projection is roughly the same as it is for an 8K video using a cubemap projection.

With ClearVR, the bandwidth requirements for 4K Fisheye 180 and 8K 360 are also comparable: 10 – 15 Mbit/s for a typical sports event.

How ClearVR enabled the experience – and could improve it even further

Since this is a Tiledmedia blog I need to make a few observations on the use of ClearVR 🙂

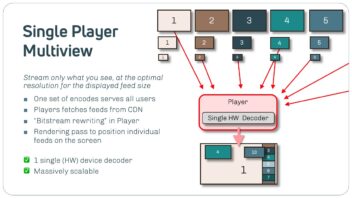

Again, I had the privilege to watch this in VR because Tiledmedia was part of the team delivering the experience. Our ClearVR SDK was used in the Xfinity Oculus VR app as the audiovisual player. The ClearVR client-side SDK was selected because it supports playback for all forms of VR: 180 and 360, 2D and 3D, tiled and “classic”, and also rectangular video. And ClearVR supports this across headsets and mobile devices. Another important reason: ClearVR gave the player seamless, synchronised, immediate camera switching – a crucial feature when users have seven feeds to choose from.

I need to point out that the distribution was NOT in our advanced tiled delivery. Distribution was in “legacy” HLS format.

Working with Cosm, we created custom-calibrated projection models for the camera/lens combination used at the Olympic Games. If you are interested: a Red Komodo 6K used with 4K output and a Canon 8-15 mm f4 fisheye used at the widest, 8mm focal length.

For future, similar events, I hope we can upgrade from HLS and use the full power of ClearVR and its viewport-adaptive streaming. That will enable streaming 360 video at full 8K resolution with a cubemap projection. It also allows stereoscopic 180 video at full 4K resolution per eye, without having to throw away half of the pixels.

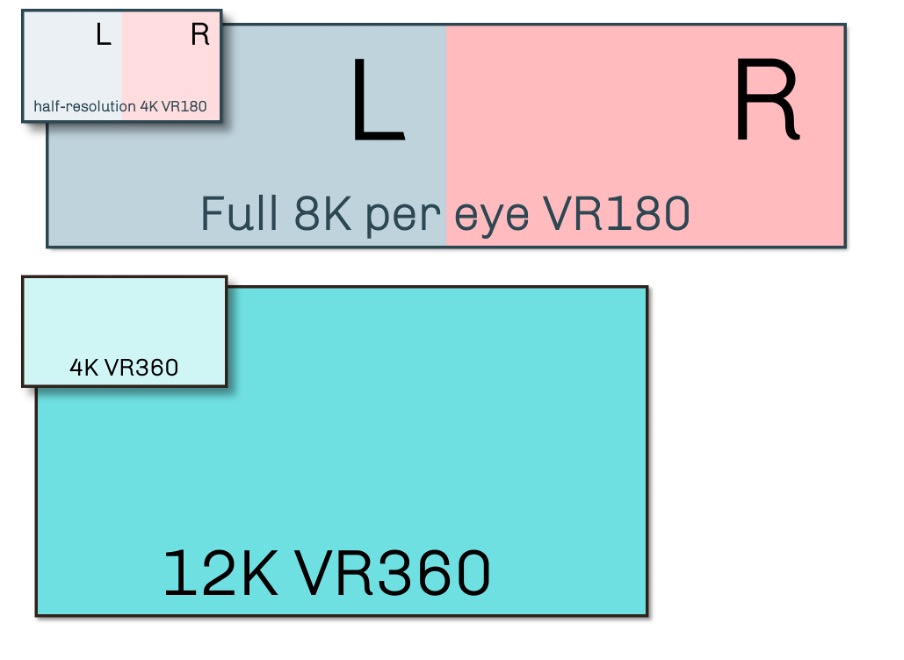

Or possibly higher still? The introduction of 8K and even 12K cameras offers tantalizing possibilities, certainly when used in conjunction with headsets like the Quest 2 and the Pico Neo 3, which have 8K decoders. When ClearVR is used as the distribution format we can go to, say, 8K stereoscopic VR180, or VR360 at 12K. That’s a huge improvement over downsampled 4K VR180 and 4K VR360 respectively.

The picture shows, in a simple graphic representation, how much of a resolution improvement this would bring. Needless to say, such an increase will make the video quality even more realistic, and the experience even better!

(Update, April 2022: If you want to understand if any of these suggestions were used for Beijing 2022, please check out the insider review of the VR coverage at the Beijing Games)

Get in touch

Got questions or comments? Want to do your own live service? Please drop me an email: rob at tiledmedia dot com.

Oh, and there’s a bit of a discussion about this post on LinkedIn.

August 31, 2021

Author

Rob Koenen