Watching the Games in VR - The Sequel

After watching a lot of Tokyo ’20 in VR, I got to do the same for Beijing ’22. Was it any different? Notably, was it any better? And could it be better still? Read on to find out.

4 April ’22 – Watching the Games in VR – the Sequel

In August of last year, I wrote an extensive review of the Tokyo 2020 VR Experience. This February, I again found myself immersed in VR, watching many of the Beijing ’22 events. The motivation was the same as for Tokyo: Tiledmedia provided the technology that powered the Olympics VR by Xfinity Meta Quest app in the US, as well as the Yangshipin VR Pico app in China.

Unlike last time, we also did the transcoding – advanced, high-quality, tiled transcoding. (In Tokyo we only provided the client-side SDK, and the transcoding was done by a third party.)

The project was a collaboration between Intel, NBC Universal, Yangshipin, Cosm, Twizted Design, Tebi, Tiledmedia, EZDRM, and more.

Here is an update of the Tokyo review, focusing on the live experience, in a VR headset.

What was the same?

Like in Tokyo, the VR production covered a variety of live sports as well as special features. The live programming included the opening and closing ceremonies, figure skating, curling, freestyle skiing, snowboarding, short track skating and ice hockey. The special features covered virtually all sports in 360 from the most amazing vantage points, like close to the ice on a skeleton sled and flying through the air with a ski jumper.

The production for Beijing was largely the same as in Tokyo. There were still five VR180 cameras and a single VR360 one. There was still a director who provided a directed cut between the VR180 cameras. Like in Tokyo, there were graphics and a virtual Jumbotron that provided the TV feed with replays and commentary. The Jumbotron was again animated, flying to center view for important replays and moving back to a more modest position during live action. The environment – a virtual suite – was also akin to the one for Tokyo, obviously updated to match the Beijing setting.

So with all these things being the same, was there any notable difference?

Well, absolutely.

A few crucial choices made all the difference, creating a totally new experience.

Total Focus on Quality

The production was already excellent in Tokyo, with great camera positions and very nice animated graphics. If anything, it was only better in Beijing. The Cosm and Twizted crews are constantly learning, and it shows. Also, the larger production is getting familiar with the presence of VR cameras and they understand the positions required for the best experience in a headset. VR production is definitely maturing.

The major difference was the dedicated focus on quality. Not just the quality of the production, but also, and importantly, the visual quality in the headset.

The experience was simpler than for Tokyo in a number of ways. First, there was no user-controlled camera selection between the five 180 cameras this time around. There were only the 180 director cut and the 360 camera. Another change from Tokyo: there was no choice between monoscopic and stereoscopic for the VR180 feed. The VR180s capture all content as a monoscopic video. This was for a good reason that I will discuss in a bit. (The VR360 was already monoscopic in Tokyo.)

Surely Ice Hockey Doesn’t Work in VR – Or Does It?

And now we get to the main difference: the resolution was MUCH higher than in Tokyo. Both the VR180 and the VR360 captured the events in 8k. This means 7 680 x 3 840 for the VR360 feed and 7 680 x 4 320 for the VR180 signal. And if you ask me, it made all the difference. Going from having 50% of 4K per eye (for stereoscopic content) in Tokyo to a full 8k source per eye in Beijing was a huge step up.

That’s a whopping 8-fold increase in pixel count. If you ever watched an event in Meta’s Horizon Venues: this is also about 8 times the resolution of sports events streamed in Venues.
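The 8-fold figure follows directly from the numbers above. Here is a quick sanity check, assuming that "4K" means a 3 840 x 2 160 frame and that the stereoscopic Tokyo feed gave each eye half of that frame (the variable names are just for illustration):

```python
# Per-eye pixel budget for the VR180 feed, Tokyo vs. Beijing.
# Assumption: "4K" is a 3840 x 2160 frame, split evenly between two eyes.
tokyo_per_eye = (3840 * 2160) // 2   # stereoscopic: half a 4K frame per eye
beijing_per_eye = 7680 * 4320        # monoscopic: the full 8k frame for each eye

ratio = beijing_per_eye / tokyo_per_eye

print(f"Tokyo:    {tokyo_per_eye:,} pixels per eye")
print(f"Beijing:  {beijing_per_eye:,} pixels per eye")
print(f"Increase: {ratio:.0f}x")
```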

And boy did it show.

I’ve done and seen a lot of video VR productions, but for the first time, I felt like the video quality was no longer an issue. I’d always believed that ice hockey was impossible in VR.

Well, I was wrong. With the 8k resolution and the 60 fps, and with camera positions in the stands, in the corners and right behind the goals, this was at least as good as watching TV. We don’t get to see much televised ice hockey in the Netherlands, so true hockey fans should be the final judge, but I found it easier to follow than on TV, definitely seeing more of the puck.

Sheer Speed and Height

“Better than TV” was true for other sports as well. I see a lot of short track skating on TV, but I never fully appreciated the sheer speed of the athletes and the crazy angles at which they round the corners. Watching in VR opened my eyes. On TV, the camera tracks the skaters. In VR, you track them, in your headset. It makes all the difference.

And take Big Air (Snowboard and Skiing): only in VR did I really see the insane height of these athletes’ jumps.

On those quality trade-offs, I didn’t miss the option to choose my own camera standpoint very much. The Tokyo games had more events that required user choice, like Track and Field and Gymnastics, with many things happening in parallel. For the Beijing events broadcast in VR, the action was always in one single spot.

The experience is generally lean-back, although it does entail actively looking around. With some sports (e.g., the Moguls), you needed to follow the action top-right to bottom-left and then quickly turn your head back as the director switched to the next camera. But the director was skilled at making the switches predictable across runs, and only used camera switches when they added to the experience. It would have been hard and tiring for viewers to make all these switches themselves.

I didn’t miss the stereoscopic view either. Perhaps when stereoscopic production matures, e.g. with the new Canon Dual Fisheye lens, it’ll be worth sacrificing per-eye resolution for a stereoscopic view. But IMHO, current workflows (with two separate 180 bodies, one for each eye, or with the current generation of 360 cameras providing stereo output) do not warrant sacrificing that extra visual crispness and detail.

Best of Both Worlds: TV and Immersion

The virtual Jumbotron was still a great feature, including the way it was animated. You basically get the best of both worlds: the feeling of presence combined with the skills of the TV director and the professional announcers.

The virtual environment (the “suite”) was very nicely done, with local elements all over the virtual place. The first time I entered I looked around quite a bit, but when I settled in, I basically focused on the video exclusively. I almost forgot I was in this environment, except when it blocked my view of the left and right ends of the video, and sometimes also below. I developed this habit of leaning back, re-centering the Quest’s position, and then leaning forward again. That gave me just that extra bit of view. But that shouldn’t be necessary, and I believe the environment could have had a bit of a larger opening to reveal more of the VR180 video.

360 or 180?

The VR360 was nice for some sports, e.g. the kiss-and-cry cam with figure skating. But I found myself switching back to the 180 feed fairly quickly. It had better camera positions, director-controlled switching, and there was a clear difference between the 360 and the 180’s in visual quality.

And that makes sense: both 360 and 180 use the same number of pixels, but the part of the sphere that the 180’s capture is less than half that of the 360 camera, so the pixel density is a lot higher. (Yes, less than half, because while it may be 180 horizontally, it’s not fully 180 vertically.) Also, the 180’s used professional 8K broadcast bodies with matching fisheye lenses, while the 360 was optimized for size: smaller devices, with smaller sensors and lenses. Again, these things show. The opening and closing ceremonies used a larger 360 camera (KanDao Obsidian Pro, a very nice camera) and that visibly improved the quality of the 360 feed.

The VR360 feed obviously provided a wider view, and for some events it would have been helpful to have a wider viewing angle than provided by the combination of VR180 feed and virtual suite. But unless and until the resolution of the VR360 matches that of the VR180, I will definitely prefer the VR180. (Matching the 180 resolution would require the VR360 ERP resolution to be some 11 – 12k; see below.)
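A rough way to arrive at a figure in that 11 – 12k range is to ask how wide a 2 x 1 ERP frame would need to be to put the same number of pixels on the same part of the sphere. The sketch below is my back-of-the-envelope model, not necessarily the calculation behind the numbers in this post. It assumes pixels are spread evenly over the covered area, and the two coverage fractions (0.50 and 0.45) are illustrative guesses for "half" and "a bit less than half".

```python
import math


def erp_equivalent_width(pixels: int, sphere_fraction: float) -> float:
    """Width of a 2:1 ERP frame with the same average pixel density.

    Simplification: pixels are treated as evenly spread over the covered
    part of the sphere. A W x W/2 ERP frame holds W*W/2 pixels for the
    full sphere, so we solve (W*W/2) * sphere_fraction = pixels for W.
    """
    return math.sqrt(2 * pixels / sphere_fraction)


vr180_pixels = 7680 * 4320  # 8k VR180 source on a 16 x 9 sensor

# Coverage fractions are assumptions, not measured values.
for fraction in (0.50, 0.45):
    width = erp_equivalent_width(vr180_pixels, fraction)
    print(f"coverage {fraction:.2f} -> ERP-equivalent width of about {width:,.0f} px")
```

With exactly half the sphere covered this gives 11 520 pixels; a slightly smaller coverage pushes the result past 12k.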

Tiling Gets the Full Quality to Viewers – Worldwide

As this is the sequel to the Tokyo review, I’d like to round it out with an update of the end-to-end delivery chain. If you read the original blog, you will remember that I explained how the quality could be improved very significantly.

Well, that is exactly how it was done.

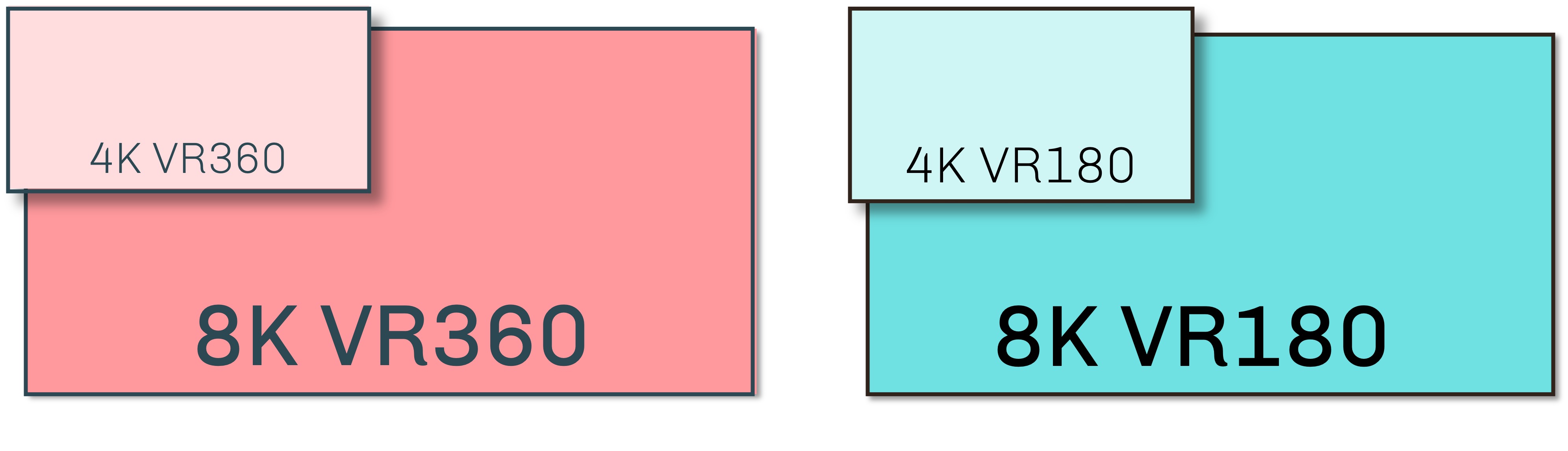

The distribution from Beijing was tiled. This allowed the distribution of 8k VR360 and the even more spectacular 8k VR180. The graphics below give an impression of the difference between a 4k and an 8k distribution, for both 180 and 360. The surface is an indication of the number of pixels the cameras record. If you look carefully you will see that there are slightly different aspect ratios for the 180 and the 360. That’s because the 180 feeds are captured with 16 x 9 broadcast sensors, while the 360 feed is a stitched 2 x 1 ERP.

Streaming at 12K ERP Quality

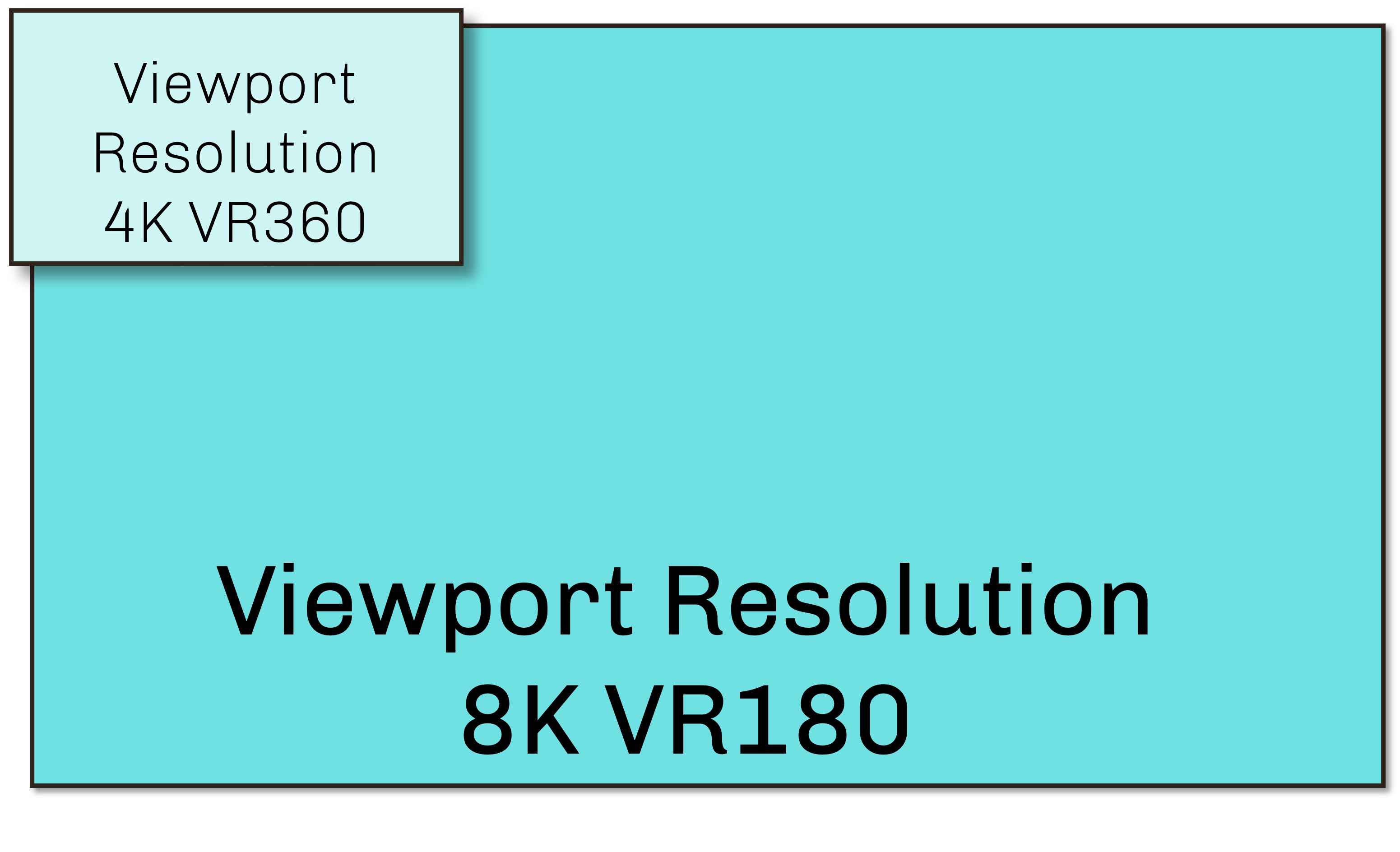

To fully appreciate the resolution of an 8k VR180 video stream, the graphic below compares the number of pixels in the viewport (what the user actually sees) between 8k VR180 and 4k VR360. The “ERP-equivalent” resolution for the VR180 video in the distribution was about 12k.

This was a world-first.

Never before had VR video been streamed at this high quality and pixel density. Not on-demand, certainly not live, let alone worldwide.

Some folks will argue that the 12k-equivalent ERP resolution is higher than the display of the Quest 2 can handle. And they have a point: the resolution in the Quest 2 is about 8k pixels for a 360 circle on the equator. In practice, though, the extra resolution is clearly visible, as everyone who watched the stream confirmed.

There is a solid technical explanation for this. The chain from camera to headset uses quite a few mapping and projection steps: from the fisheye capture by the camera, to a cubemap representation in the distribution path, and then the projection onto the viewport in the headset display. Every step entails a mapping of one pixel grid onto another, with imperfect interpolation, losses and rounding errors. The extra resolution counters these losses and rounding errors, protecting the sharpness and detail of the captured video.

That said, we were probably outresolving the Quest’s and Pico’s displays at the highest ABR level. The step up from Medium to High ABR level was visible, but small.

Adapting the Bitrates

Speaking of Adaptive Bitrate (ABR) levels: if you are still reading, there are a few more details you may also find interesting.

Even with Tiled Streaming, more pixels in the viewport inevitably means more bandwidth. The super-high 12k resolution required about 25 Mbit/s on average, and with modern internet and WiFi, this is usually no problem. But not everyone has that kind of access bandwidth, so the content was distributed in three different tiled resolutions. Automatic switching ensured all users got the best possible quality.
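To make the idea of automatic switching concrete, here is a minimal sketch of a generic throughput-based selection rule. It is not the logic in the Tiledmedia SDK. Only the 25 Mbit/s figure comes from this post; the other two bitrates, the `headroom` factor, and all names are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AbrLevel:
    name: str
    avg_mbps: float  # average bitrate a viewer needs for this level


# Only the 25 Mbit/s figure is from the post; the other two are placeholders.
LEVELS = [
    AbrLevel("low", 8.0),      # hypothetical
    AbrLevel("medium", 15.0),  # hypothetical
    AbrLevel("high", 25.0),    # average quoted for the 12k-equivalent level
]


def pick_level(measured_mbps: float, headroom: float = 0.8) -> AbrLevel:
    """Pick the highest level that fits within a fraction of measured throughput.

    Falls back to the lowest level when nothing fits, so playback continues.
    """
    budget = measured_mbps * headroom
    fitting = [lvl for lvl in LEVELS if lvl.avg_mbps <= budget]
    return max(fitting, key=lambda lvl: lvl.avg_mbps) if fitting else LEVELS[0]


for throughput in (5, 20, 40):
    print(f"{throughput} Mbit/s measured -> {pick_level(throughput).name}")
```

The headroom factor keeps the player from picking a level that only just fits, which would leave no margin for throughput dips. A real player would also smooth its throughput measurements over time.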

Yep, this is classical “adaptive bitrate streaming”, a world-first for tiled streaming. (We also produced adaptive bitrate non-tiled HLS feeds for web use, four layers to be precise. They were distributed in China, and had millions of views.)

Another tidbit is the replication of the VR feeds to local CDN origins across the globe. Part of Tiledmedia’s assignment was ensuring the streams were available worldwide. We did this through our partner Tebi. The total bitrate for all representations of all tiles in all bitrate versions was 1.4 Gbit/s. We did the transcoding in Tokyo, producing packaged tiles and manifest files. From there, Tebi replicated the transcoded feeds, lightning-fast, to origin servers in China, US West, US East, and Europe. (With tiled streaming, access times are way more critical than with ordinary streaming. Round-trip delays in tile requests will show as low resolution patches in the video.)

Interestingly, to get the bitrate for the user as low as possible, we need to produce quite a few versions of all the tiles. In other words: to lower the user bitrate we increase the bitrate into the origin.
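The numbers above make that trade-off easy to quantify. A small sketch, using only figures from this post plus two assumptions of mine: every origin receives a full copy of the feed, and a gigabyte is counted as 10^9 bytes.

```python
# Figures from the post.
origin_ingest_gbps = 1.4   # all tiles, all representations, all bitrate versions
user_high_mbps = 25.0      # average for a viewer at the highest quality level
origin_regions = ["China", "US West", "US East", "Europe"]

# How much more flows into an origin than a single top-quality viewer pulls out.
ingest_to_user_ratio = origin_ingest_gbps * 1000 / user_high_mbps

# Aggregate replication traffic, assuming each origin receives a full copy.
total_replication_gbps = origin_ingest_gbps * len(origin_regions)

# Data arriving at one origin per hour of live coverage (decimal gigabytes).
gb_per_hour = origin_ingest_gbps / 8 * 3600

print(f"Origin ingest vs. one viewer: about {ingest_to_user_ratio:.0f}x")
print(f"Replication to {len(origin_regions)} origins: {total_replication_gbps:.1f} Gbit/s")
print(f"Per origin, per hour: about {gb_per_hour:.0f} GB")
```

So each origin takes in roughly 56 times what a single top-quality viewer downloads, which is the price of letting every viewer fetch only the tiles they actually look at.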

Maybe You Can Check it Out Yourself?

If you want to check it out for yourself, you may be in luck. Those living in the USA and China still have access to all Beijing events that were captured. In the US, install the Olympics VR by Xfinity app on your Quest. Those in China with a Pico headset can get the Yangshipin VR app. There are full replays, highlights, and amazing 360 feature clips that cover all sports, also those not broadcast live in VR.

Are We There Yet?

While the Beijing Games looked fantastic, we are not nearly there yet. This is certainly not the end of innovation in VR quality. Headsets with higher resolution displays will appear, hopefully soon, and I can’t wait to try some of the Beijing content on them. It should look even more gorgeous. As I noted above, the VR180 content had more resolution than the display needed.

VR360/180 cameras keep improving as well: for instance, check out the Meta Camera 3. It’s not yet on Meta Camera’s official website, but a sneak peek is here. (Note: despite its name, Meta Camera is not affiliated with Meta, the Facebook parent – Meta Camera got there first 🙂 )

Needless to say, Tiledmedia is ready when these new toys hit the market. Bring it on!

Got questions or comments? Want to do your own live service? Please drop me an email: rob at tiledmedia dot com.

March 31, 2022

Author

Rob Koenen
