Why Single-Player Multiview is Superior

As streaming services evolve, viewer demand for new and innovative ways to consume content is making multiview an essential feature. Today’s audiences expect experiences that are engaging, interactive, and mobile-friendly. OTT service providers must adapt by empowering fans to follow their favorite athletes, games, and programs. Users should be able to personalize their viewing experience alongside the curated director’s feed. But delivering a high-quality multiview experience is a complex challenge.

In this blog, I’ll explore three primary approaches to implementing multiview, analyzing their pros and cons: “Many Encodes”, “Many Players”, and “Single Player Multiview”.

TL;DR

Of these three methods, Single Player Multiview has an unfair advantage when it comes to streaming efficiency, switching speed, ABR management, stream synchronization, and more. It combines the best user experience with the highest distribution efficiency. With full knowledge of everything that is happening on the screen combined in a single player, that player can adapt its ABR decisions and segment retrieval strategy accordingly. Single Player Multiview is also the only approach that can ensure reliable sync between multiple feeds.

Three Approaches to Tackling Multiview

There are many ways to tackle multiview. I will describe the three basic approaches that are used in the market today, and I will try to explain why I believe that our approach (Single Player Multiview) is the best. It is the only method that is multi-stream at heart, instead of bolting multiview onto single-stream solutions. While variations exist, all multiview deployments are a form of these three basic approaches:

- Many Encodes

- Many Players

- Single Player Multiview

There’s yet another approach, in which each viewer has a dedicated edge/cloud transcoder, similar to what’s used for cloud gaming. This is so costly to deploy at scale that I won’t discuss it further.

Let’s dive into each approach in a bit more detail.

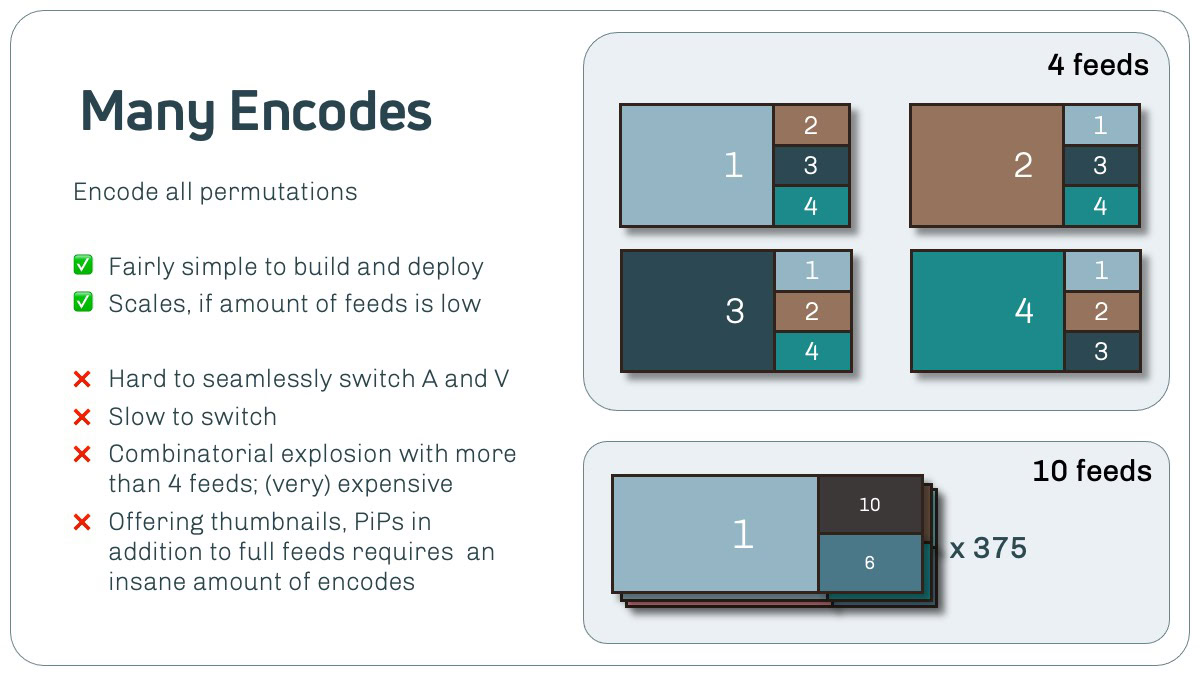

1. Many Encodes

Server-side encoding of multiple layouts is perhaps the simplest way to deliver a multiview experience. This method involves creating several streams with different layouts and allowing viewers to select their preferred layout. It leverages existing infrastructure: regular players and transcoders. This approach is currently used by YouTube, and NBC Universal used it for the Paris Olympics.

This method has significant downsides. Every combination of streams requires a unique transcode. This solution is practical for basic layouts with up to, say, four feeds, such as a 2×2, 1×2, or 3×1 grid. Even that has limitations, as YouTube users have made clear by complaining about the limited options in the NFL multiview offering.

Combinatorial Explosion

But let’s say we want to offer 10 streams in a motorsport race with a few layouts. For instance, we want to offer 2×2, 3×1 and 2×1 layouts. Offering all possible combinations requires encoding ABR ladders for 375 different streams (210 + 120 + 45 ways to pick 4, 3 or 2 feeds out of 10). And if we wanted to give full flexibility, where users can arrange streams in any order on screen, that would require close to 6,000 ladders!
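The two figures above can be checked with a few lines of Python. This is a minimal sketch, assuming the three layouts hold 4, 3 and 2 feeds respectively (for example 2×2, 3×1 and 2×1); the names `LAYOUT_SIZES`, `ladders_unordered` and `ladders_ordered` are made up for this illustration.

```python
from math import comb, perm

FEEDS = 10
# Assumed number of tiles per layout (e.g. 2x2, 3x1, 2x1).
LAYOUT_SIZES = {"2x2": 4, "3x1": 3, "2x1": 2}


def ladders_unordered(feeds: int, layout_sizes: dict) -> int:
    """Ladders needed when only the choice of feeds matters, not their position."""
    return sum(comb(feeds, k) for k in layout_sizes.values())


def ladders_ordered(feeds: int, layout_sizes: dict) -> int:
    """Ladders needed when users may also place the feeds in any order."""
    return sum(perm(feeds, k) for k in layout_sizes.values())


print(ladders_unordered(FEEDS, LAYOUT_SIZES))  # 210 + 120 + 45 = 375
print(ladders_ordered(FEEDS, LAYOUT_SIZES))    # 5040 + 720 + 90 = 5850
```

Adding a single extra layout or a handful of extra feeds makes both numbers grow quickly, which is the point of the argument.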

This “combinatorial explosion” is not the only issue. Switching between streams can be sluggish because the frame buffer is emptied at every switch to a different layout. Users are likely to see the video stall while the new layout is retrieved. There will also be a brief silence. Or, if we keep the “old” video playing while the new layout is loading in parallel, the switch will take some time to complete and usually results in a timeline discontinuity. This makes the experience feel sluggish and glitchy, and it creates FOMO – the fear of missing out on an important moment in a game. So while server-side encoding offers a relatively easy entry point into multiview, it is not a viable solution for a future-proof, scalable, seamless, enjoyable multiview experience.

(In theory we could omit the distinct transcodes by doing a set of tiled encodes and then having a separate packaging step for every lay-out. But the combinatorial explosion remains – we’ve only moved it from the encoding to the packaging. Or, we could do real-time packaging, which will require a dedicated packager instance per user – hardly a scalable solution either.)

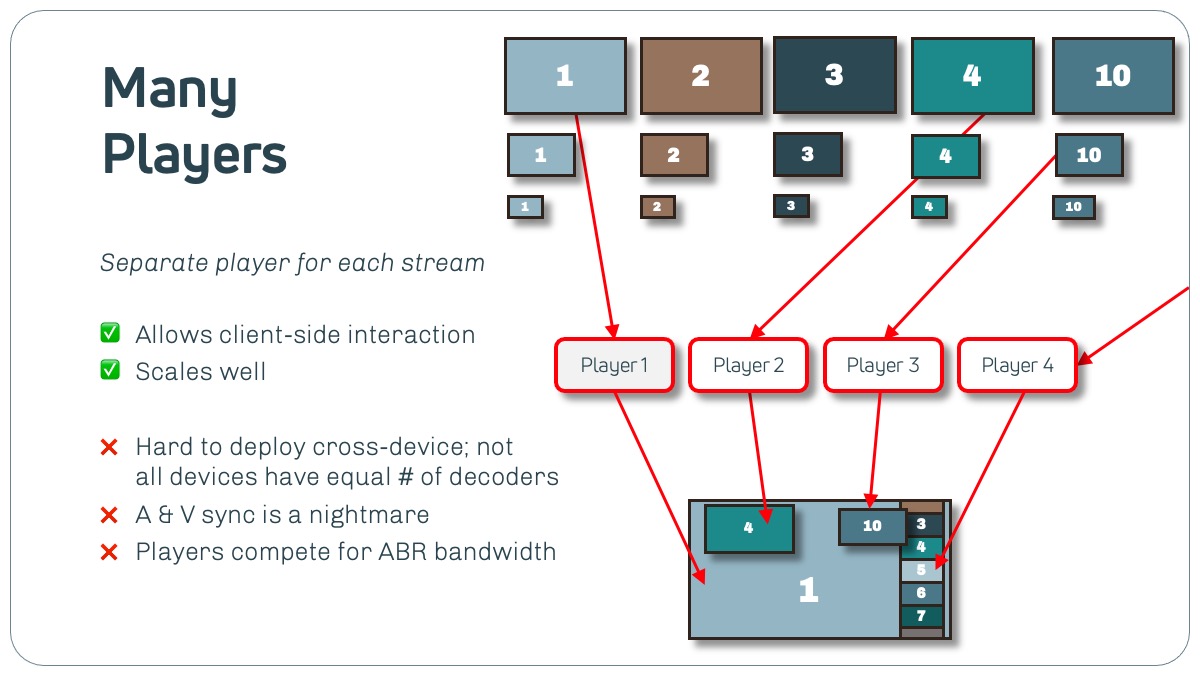

2. Many Players

Another straightforward multiview solution is using a separate player for every feed on the screen. Most platforms support spawning multiple players in parallel, and this allows for the reuse of existing player and transcoding infrastructure. This approach enables flexible client-side interaction and feed positioning. It supports existing ABR ladders, DRM, and DAI mechanisms, so it would seem a natural evolution from conventional, single-feed streaming. Like in the single-stream world, most players will use the platform-native player stack to do the actual content streaming (ExoPlayer for Android, AVPlayer for iOS and MSE for Web).

Multiview offerings of Apple and FuboTV do just this, but it comes with its own set of challenges. It is already difficult enough to control the behaviour of native platform players, let alone several of them running in parallel. Some platforms don’t allow multiple players running in parallel, and when they do, their number varies. Smart TVs and devices connected to TVs are frequently constrained, supporting only one or two players running simultaneously. Some platforms allow time-multiplexing for decoding multiple streams, but certainly not all. And multi-player behaviour has platform-specific quirks, making cross-platform multiview a very tough nut to crack. To add insult to injury, it is virtually impossible to sync multiple feeds between uncorrelated players running in their own sandbox.

Adaptive bitrate and buffer management are particularly hard to get right; please allow me to do a deep-dive to explore this in a bit more detail.

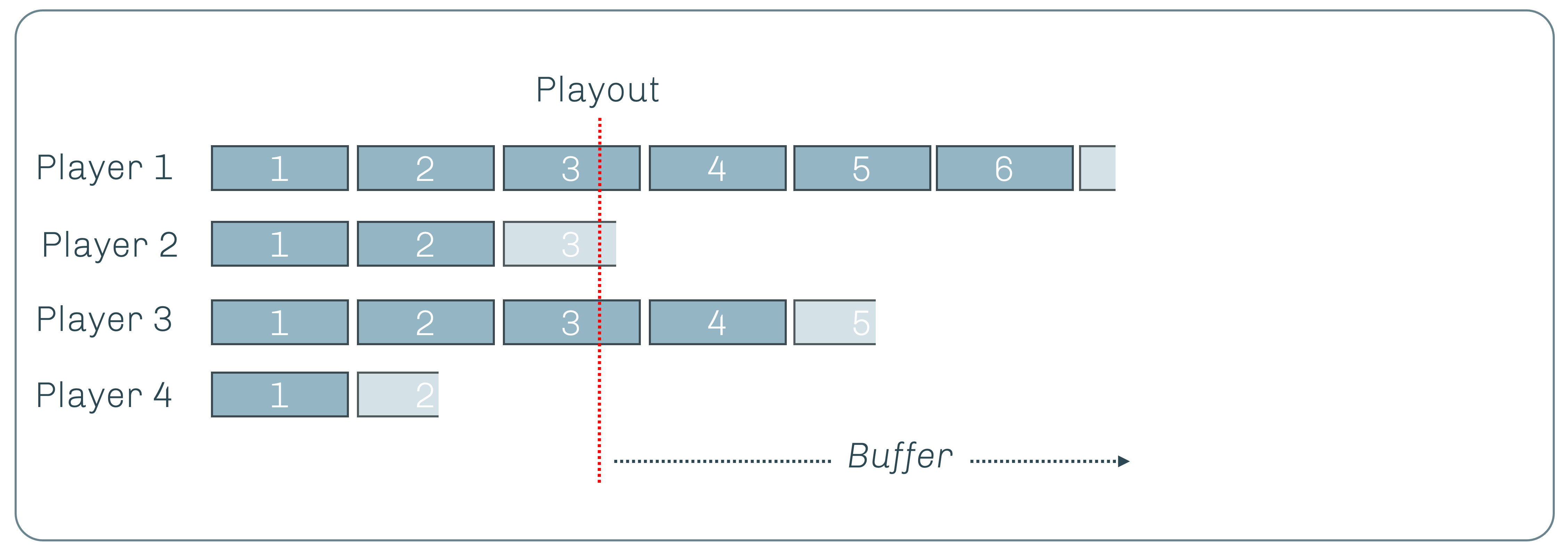

Many player segment management deep-dive

Assume we have a multiview scene with four players running in parallel. Each player, sitting in its own happy little sandbox, downloads segments for its own stream. In the picture below, each rectangle is a segment. All segments have the same content length, say 2 seconds.

Because all players are independent and unaware of each other’s existence, they will run their own segment download strategy and ABR decision engines. Player 1 is not aware of Players 2, 3, and 4, so it will greedily start filling its buffer. In doing so, it will compete for the same bandwidth that Players 2, 3 and 4 are using. Due to the way TCP works, it’s very unlikely for all players to fill their buffers at the same rate. One of them will invariably move faster than the others. But even when Player 1 has enough data to start playing, it won’t stop to give its colleagues extra room to fill their buffers. Instead, the faster Player 1 might start downloading segment 3 while Player 4 might still struggle to retrieve segment 1.

For end-users, this means that the multiview experience is unnecessarily slow to load. This problem is further exacerbated each time the user adds, removes or switches any of the multiview feeds. A newly spawned player will be at a disadvantage, starting with an empty buffer, while the other players are blissfully unaware and will continue filling their already pretty full buffers. The poor user will experience even more buffering.

While higher-than-necessary load times are inconvenient, they might not be a showstopper by themselves. But they are only the beginning of the problems with this approach. Consider, again, adaptive bitrate strategies. Most video players base their ABR decision logic on a combination of buffer size and download rates. They calculate download rates by simply timing the download of a segment of a given size. When players are not aware of each other’s existence, this fails miserably. A player might download a segment at the same time as another player, and conclude that the effective available bandwidth is half the actually available bandwidth. A few moments later, it might be downloading a segment when the other players are not, and it will think available bandwidth has just doubled. A little later still, all 4 players may be retrieving segments at the same time, and our Player 1 will draw the conclusion that bandwidth has dropped by a factor of 4. The result is four unstable and untrustworthy ABR engines, causing unnecessary buffering for the user as well as lots of annoying quality fluctuations.
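The swings described above can be illustrated with a toy model. This is a sketch under a strong simplifying assumption: the link is shared perfectly equally between all downloads in flight, which real TCP connections do not guarantee. The function names and the 20 Mbps figure are hypothetical.

```python
from typing import List


def per_player_estimate(link_mbps: float, concurrent_downloads: int) -> float:
    """Throughput a single player measures while sharing the link equally."""
    if concurrent_downloads < 1:
        raise ValueError("at least one download must be in flight")
    return link_mbps / concurrent_downloads


def aggregate_estimate(per_download_mbps: List[float]) -> float:
    """Throughput seen by one engine that observes every download at once."""
    return sum(per_download_mbps)


LINK_MBPS = 20.0  # assumed true link capacity, constant throughout

# Player 1 downloads alongside one other player, then alone, then with all four.
for in_flight in (2, 1, 4):
    seen = per_player_estimate(LINK_MBPS, in_flight)
    print(f"{in_flight} downloads in flight: Player 1 estimates {seen:.1f} Mbps")

# An engine that sees all four downloads adds them up and recovers the capacity.
shares = [per_player_estimate(LINK_MBPS, 4)] * 4
print(f"aggregate estimate: {aggregate_estimate(shares):.1f} Mbps")
```

In this model the link never changes, yet the isolated player's estimate moves between a quarter and the whole of the real capacity depending only on what its neighbours happen to be doing.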

This is not just theory. We created a simple test app that shows this behaviour when spawning 4 AVPlayers in iPadOS. Each player streams a 60 fps clock that counts seconds (large number) and frames (“xx/60”). The picture below provides a screenshot. In the 4-player solution, there is no simple coordination in the playback timing, and there is no coordination in the segment retrieval strategies either.

We also made a short screen capture video that shows two runs side-by-side: our multi-stream player playing the four clock streams, and an iOS 4-player solution. When we start playing, the AVPlayer clocks rapidly get out of sync, even when bandwidth is more than enough to stream all of them at high quality without buffering, as was the case in this video. (If you watch it, pause a few times to better see what’s going on.) That video is here.

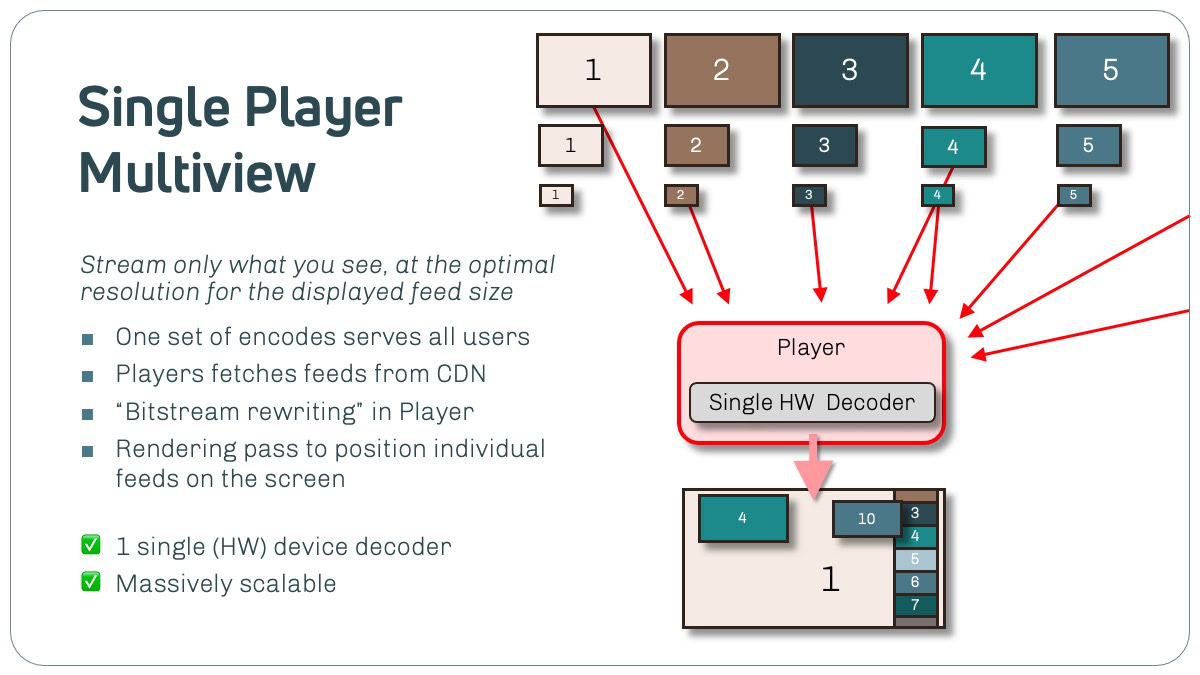

3. Single Player Multiview

The last approach to doing multiview, IMHO far superior to the other two, is using a single, multi-stream player. In its most advanced form, this method relies on streams encoded in a tiled fashion. This is a bit like how 4K content is often encoded as four HD tiles by 4 separate encoders, and then streamed and displayed as a single feed on the screen. There is one difference though: in the 4K case, there is only one stream with 4 tiles. In tiled multiview streaming, the player retrieves individual feeds as required (could be 4, but also fewer or more) and then the multi-stream player merges these bitstreams into a single bitstream before giving it to the decoder – the hardware device decoder.

As I noted in the TL;DR part of the introduction, the single-player, multi-stream solution has unfair advantages in efficiency, switching speed, ABR, synchronization, and more. It is also the most scalable solution of the three. It has full knowledge of everything that is happening on the screen, and it can adapt its ABR retrieval strategy accordingly. It knows which video segments to fetch, at what resolution and quality. If bandwidth goes down, it can make an intelligent decision, across all visible feeds, about where to spend bits. It also has full control over the decoding and rendering chain, so it can guarantee frame-level sync between multiple feeds.
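To make "deciding across all visible feeds where to spend bits" concrete, here is a minimal sketch of a joint allocation. It is not the Tiledmedia Player's actual algorithm, which this post does not describe; the `Feed` class, the `screen_share` weight and the greedy upgrade rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Feed:
    name: str
    screen_share: float     # hypothetical priority: fraction of the screen covered
    ladder_kbps: List[int]  # available bitrates, in ascending order


def allocate(feeds: List[Feed], budget_kbps: int) -> Dict[str, int]:
    """Greedy joint ABR: start every feed on its lowest rung, then hand out
    upgrades in rounds, largest on-screen feed first, while the budget allows."""
    level = {f.name: 0 for f in feeds}
    # Every feed always gets its lowest rung, even if that exceeds the budget.
    spent = sum(f.ladder_kbps[0] for f in feeds)
    by_priority = sorted(feeds, key=lambda f: f.screen_share, reverse=True)

    upgraded = True
    while upgraded:
        upgraded = False
        for f in by_priority:
            i = level[f.name]
            if i + 1 < len(f.ladder_kbps):
                extra = f.ladder_kbps[i + 1] - f.ladder_kbps[i]
                if spent + extra <= budget_kbps:
                    level[f.name] = i + 1
                    spent += extra
                    upgraded = True
    return {f.name: f.ladder_kbps[level[f.name]] for f in feeds}


LADDER = [500, 1500, 3000, 6000]
feeds = [Feed("main", 0.5, LADDER), Feed("a", 0.25, LADDER), Feed("b", 0.25, LADDER)]
print(allocate(feeds, budget_kbps=6000))
```

With a 6,000 kbps budget the large feed ends up one rung above the two small ones, and the total stays within the budget. Independent players cannot make this kind of trade-off because none of them sees the whole screen.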

The Tiledmedia Player implements these mechanisms. Since platform-native players don’t provide any of this, we built – were forced to build – our multi-stream player engine from the ground up.

As explained in my previous blog, Tiledmedia started out by cracking the viewport-adaptive VR streaming problem. We opted to solve it with “tiled streaming”, and we had no other choice than to build a multi-stream player from scratch. In tiled VR streaming, there are easily 25 separate streams at any given time, playing out in lock-step sync. We need to be able to switch these in and out lightning-fast when a headset user moves their head. This required building a network interface, segment retrieval engine, and ABR logic that were all multi-stream at their core, and super-tightly coordinated. In VR, even one missing segment means a blurry hole in the headset video – not good. This multi-stream video engine now also forms the heart of our multiview solution.

While our multi-stream player contains very advanced stream management logic, it relies on standard streaming formats and simple HTTP streaming. That makes our Single Player Multiview approach compatible, out of the box, with DRM, ad insertion solutions (server-side and client-side), quality metrics and error reporting frameworks, and other client-side tooling. It can be used with existing transcoding chains, where a few straightforward encoder settings unlock the most advanced form of multiview with frame-accurate cross-feed sync. There are no special requirements on the CDN; what works for conventional HTTP streaming also works for multiview streaming.

What I describe above is the most advanced form of single-player multiview. In some environments, like in web browsers, there are benefits to using a single-player, multi-decoder approach. The way we implemented this, we can guarantee perfect sync and we get the full benefits from a single ABR engine. This approach also allows mixing and matching frame rates, picture sizes and even codecs!

Further Single-Player Advantages

While I focused on streaming behaviour in this blog, using a player that is natively multi-stream has other significant benefits over bolting multiview onto a single-stream solution. Here are a few of these advantages:

- Unlimited Stream Views: There is no device-imposed limit on the number of feeds that can be displayed simultaneously. Multiview is possible even on devices that only support a single decoder.

- Full Layout Freedom: Both app designers and individual users can have complete control over the layout, where the app designer can decide what options are presented to the user.

- Instant Switching with Continuous Audio: Because all feeds are handled by a single player, there is seamless stream switching without any audio interruptions, drops or discontinuities.

- Frame-Accurate Synchronization: Streams always maintain frame-accurate sync.

- Personalization and Scalability: the number of feeds shown in a multiview experience is only limited by the user interface and what makes sense to display at the same time in an app. Theoretically, the decoder capacity and available bandwidth play a role as well, but in practice the UI limits kick in before hitting any of these limits. Modern devices can easily handle 25+ streams.

- Bandwidth Efficiency: a multi-stream player will only retrieve the streams that are visible, and only in the resolution that is required for the size that is on the display. Other solutions sometimes retrieve 4 HD streams for a 4-feed multiview, regardless of the size on the display.

- Optimised ABR: there is a single ABR engine that has full knowledge of the network and what is visible on the screen. The ABR engine can make trade-offs across all feeds and their buffers, ensuring the most efficient retrieval and the least amount of buffering.

- Universal Compatibility: A single set of ABR ladders serves all users and devices across all platforms. Note that all individual feeds are encoded as regular HLS ladders that will normally play in any player. The encoding needs to be done with a few simple settings to enable the magic multiview merging in the player.
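The Bandwidth Efficiency point in the list above can be sketched as a rendition choice driven by on-screen size. The ladder values and the `rendition_for_tile` helper are hypothetical; a real player would also weigh the available bandwidth and buffer state.

```python
from typing import List, Tuple

# Hypothetical ladder: (height in pixels, bitrate in kbps), in ascending order.
LADDER: List[Tuple[int, int]] = [
    (270, 400), (360, 800), (540, 1800), (720, 3000), (1080, 6000),
]


def rendition_for_tile(tile_height_px: int,
                       ladder: List[Tuple[int, int]]) -> Tuple[int, int]:
    """Pick the smallest rendition that still covers the tile's height;
    fall back to the top rung when the tile is larger than every rendition."""
    for height, kbps in ladder:
        if height >= tile_height_px:
            return (height, kbps)
    return ladder[-1]


# A 2x2 grid on a 1080p display gives four tiles of 540 pixels high.
tile = rendition_for_tile(540, LADDER)
size_aware_kbps = 4 * tile[1]        # four feeds at the tile-sized rendition
always_hd_kbps = 4 * LADDER[-1][1]   # four feeds at full HD regardless of size
print(tile, size_aware_kbps, always_hd_kbps)
```

With these assumed numbers, the size-aware choice needs 7,200 kbps for the four feeds, against 24,000 kbps when every feed is fetched in full HD.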

Building a player from scratch and owning & controlling 100% of the code offers more benefits, for multiview and conventional streaming alike. Again, you can read about those in my previous blog.

I’m obviously rooting for the home team, so please make up your own mind. Play around with the Tiledmedia Demo Player, which you can find in Apple’s App Store and Google’s Play Store. I’d love to get your feedback! Drop me a note at ray at tiledmedia dot com, or leave a message here.

August 29, 2024

Author

Ray van Brandenburg
