The Sometimes Surprising Upsides of Building a Player From Scratch

When Tiledmedia’s CTO Ray van Brandenburg began exploring tiled streaming in 2010, conventional streaming wasn’t exactly top of his mind. But today, after a few detours in the Tiledmedia journey, it is his bread and butter – with a few surprise findings.

In his deep-dive blog post, Ray reflects on how the pursuit of complex use cases led to the creation of the Tiledmedia Player, arguably the world’s most advanced player. It not only supports super-advanced VR streaming and extremely flexible multiview, but is also a very robust audiovisual player.

Ray explains how solving hard problems for VR and multiview streaming helped Tiledmedia build a reliable player for any streaming use case.

Solving Hard Problems

When we started Tiledmedia in 2017, building on seven years of R&D at the research institute TNO, we had a solid understanding of tiled streaming. We had also worked on tightly syncing multiple video players for second-screen use cases. Personally, I had worked on CDN optimisation and interoperability within the IETF, editing several IETF specs. We also understood the ins and outs of the HEVC codec, and especially how to use HEVC tiled coding to our advantage.

It’s that unique combination of video and network expertise that enabled us to tackle advanced VR streaming and develop our ClearVR solution, which remains unparalleled even today.

Tiled streaming for “viewport-adaptive VR” is a hard problem. You want to stream only the pixels that are in the viewport. But for a great user experience, you need to be ultra-responsive to changes in the viewport as the user looks around. Say you’re wearing a headset, sitting in the middle of an 8K VR360 sphere watching a football match. To stream this efficiently, we create a low-resolution background video that covers the full sphere, plus a set of high-resolution foreground tiles. We divide the sphere into about 100 tiles; the background also consists of a few tiles. Our player retrieves only the foreground tiles that are in view, while the background is always streamed.
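To make "retrieve only the foreground tiles that are in view" concrete, here is a minimal Go sketch. It is not Tiledmedia’s code: it assumes a hypothetical 12 × 8 equirectangular grid (96 tiles, close to the "about 100" above), treats the viewport as a yaw/pitch rectangle, and ignores projection distortion near the poles, which a real implementation would have to handle. The names `TileID`, `Viewport`, and `VisibleTiles` are illustrative.

```go
package main

import "math"

// TileID identifies one foreground tile in a hypothetical cols x rows
// equirectangular grid: column 0 starts at yaw 0°, row 0 starts at pitch +90°.
type TileID struct{ Col, Row int }

// Viewport is the current view direction and field of view, all in degrees.
type Viewport struct {
	Yaw, Pitch float64 // Yaw in [0, 360), Pitch in [-90, 90]
	HFov, VFov float64 // horizontal and vertical field of view
}

// VisibleTiles returns the tiles that overlap the viewport, enlarged by
// marginDeg on every side so neighbouring tiles can be prefetched.
// Simplification: overlap is tested on yaw/pitch rectangles, so projection
// distortion near the poles is ignored.
func VisibleTiles(v Viewport, cols, rows int, marginDeg float64) []TileID {
	colW := 360.0 / float64(cols)
	rowH := 180.0 / float64(rows)
	hHalf := v.HFov/2 + marginDeg
	vHalf := v.VFov/2 + marginDeg

	var out []TileID
	for r := 0; r < rows; r++ {
		top := 90 - float64(r)*rowH
		bottom := top - rowH
		if bottom >= v.Pitch+vHalf || top <= v.Pitch-vHalf {
			continue // row lies entirely above or below the viewport
		}
		for c := 0; c < cols; c++ {
			center := (float64(c) + 0.5) * colW
			// Shortest angular distance between tile centre and view yaw,
			// so a view at yaw 350° also picks up tiles just past 0°.
			d := math.Abs(math.Mod(center-v.Yaw+540, 360) - 180)
			if d < hHalf+colW/2 {
				out = append(out, TileID{Col: c, Row: r})
			}
		}
	}
	return out
}
```

With a 90° × 90° viewport looking straight ahead, this selects 16 of the 96 tiles. A positive `marginDeg` adds a ring of neighbouring tiles, trading some bandwidth for having them already buffered when the head turns.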

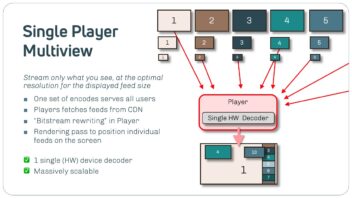

At any given moment, some 20 of these tiles need to be fetched from the CDN. Remember that we use only a single decoder: the hardware decoder in the device, usually a headset or a mobile device. To be able to use that single hardware decoder, we merge all these tiles into a single bitstream before dispatching it to the decoder. We don’t want the user to ever see seams between the tiles, and we don’t want to show them the low-resolution background video either.

This means we need to juggle quite a few things:

- All tiles need to be exactly in sync when we fuse them together: the background tiles and all visible foreground tiles;

- All tiles have individual buffers, and we don’t want one tile’s buffer to run empty while another buffer still has 2 seconds to go;

- When the user moves their head, we need to do a lightning-fast retrieval of the high-resolution foreground tiles that cover the part of the sphere that has just come into view;

- On top of all this, we want to be able to do frame-accurate camera switches while the video continues to play and the audio doesn’t skip a beat.
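The first two constraints in this list can be illustrated with a small Go sketch. It is not Tiledmedia’s implementation: the `Frame` and `TileBuffer` types, the string tile IDs, and the shared PTS timescale are hypothetical, and the actual HEVC bitstream merge (rewriting slice headers and parameter sets) is left out. It shows two ideas: the merger only takes frames when every required tile has a frame with the same timestamp, and the retrieval side can rank tiles by how little they have buffered so the emptiest buffer is refilled first.

```go
package main

import "sort"

// Frame is one encoded tile frame; Data would hold the tile's coded slice data.
type Frame struct {
	PTS  int64 // presentation timestamp in a timescale shared by all tiles
	Data []byte
}

// TileBuffer is a hypothetical per-tile queue of frames in PTS order.
type TileBuffer struct {
	Frames []Frame
}

// BufferedUntil returns the PTS of the last buffered frame, or -1 if empty.
func (b *TileBuffer) BufferedUntil() int64 {
	if len(b.Frames) == 0 {
		return -1
	}
	return b.Frames[len(b.Frames)-1].PTS
}

// PopAligned removes one frame at the given PTS from every tile. If any tile
// does not have that frame at the head of its queue, nothing is removed and
// ok is false, so frames from different instants are never fused together.
func PopAligned(tiles map[string]*TileBuffer, pts int64) (frames map[string]Frame, ok bool) {
	for _, b := range tiles {
		if len(b.Frames) == 0 || b.Frames[0].PTS != pts {
			return nil, false // at least one tile is not ready: wait
		}
	}
	frames = make(map[string]Frame, len(tiles))
	for id, b := range tiles {
		frames[id] = b.Frames[0]
		b.Frames = b.Frames[1:]
	}
	return frames, true
}

// Starving returns tile IDs ordered from least to most buffered, so the
// retrieval scheduler can refill the emptiest buffer first.
func Starving(tiles map[string]*TileBuffer) []string {
	ids := make([]string, 0, len(tiles))
	for id := range tiles {
		ids = append(ids, id)
	}
	sort.Slice(ids, func(i, j int) bool {
		bi, bj := tiles[ids[i]].BufferedUntil(), tiles[ids[j]].BufferedUntil()
		if bi != bj {
			return bi < bj
		}
		return ids[i] < ids[j] // deterministic tie-break
	})
	return ids
}
```

Refusing to take anything when a single tile lacks its frame is what prevents visible seams; a real player would additionally need a deadline policy for a tile that arrives too late.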

Tight Control

This juggling act requires incredibly tight control of all network retrieval sessions, the buffers, the bitstream rewriting, and the audio/video playback. And all of it in real time, 60 or even 90 times per second.

When we turned our attention to multiview, the challenges were basically the same. Again, there are many parallel streams. Again, you need a single engine to handle all network requests and buffer management. Again, the streams (cameras in this case) need to be in exact sync. And again, camera switches have to be seamless, without even the slightest hint of audio drops or discontinuities.

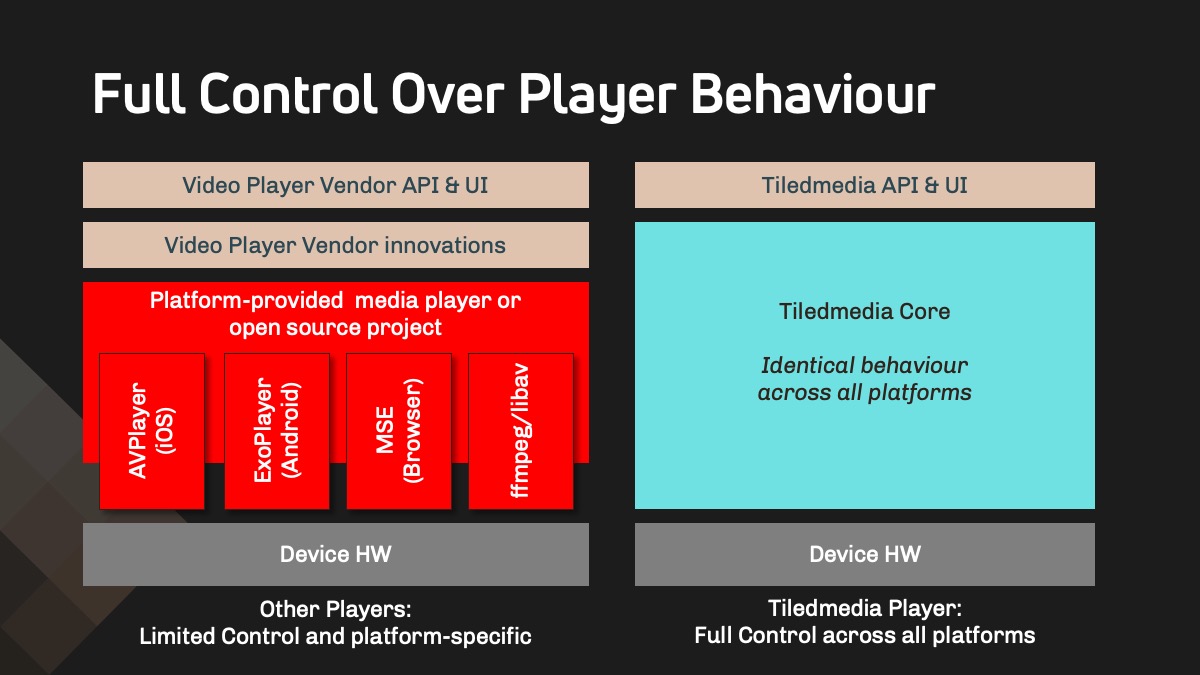

And this brings me to the heart of the argument. We could only ever develop a player to meet these strict requirements if we wrote our own stack, from scratch. And so that’s what we did. There is no way we could ever have had the required control over requests, buffers, and playback if we had tried to create something on top of existing players like AVPlayer or ExoPlayer.

There is, for example, no way to do efficient and seamless multiview if you have to instantiate and manage six separate AVPlayer instances. The picture above illustrates this.

The Common Core

So, build a player from the ground up we did, and we ported it to mobile devices, headsets, the web, and many living room devices. And we keep adding to this list. Across all of these platforms we have the same player with the same core stack, written in Go. With that field-proven core stack, the switch from VR to multiview was straightforward. Of course, we needed to build new interfaces for developers, as multiview and VR applications have different API requirements. But we had already done the hard part.

In our work with our multiview customers, we see on a daily basis that controlling the entire stack has numerous advantages, many by design and some unexpected. When you support multiview, you obviously also support “monoview”, or “single-feed streaming”. That’s also where our customers start their integration and testing.

The Advantages of Owning the Stack

Let me now sum up ten of these advantages that we see with our customers – and that our customers see as well when they start working with our player, sometimes to their surprise.

- Since we need such tight control over retrieval and playback for VR and multiview, we can also fine-tune our ABR logic, and we already see it beat the competition in 99% of our tests.

- The same applies to video start-up times and buffering, where our measurements also show better numbers. We can control these much more tightly than commercial players built on an operating system player like ExoPlayer, or DIY players built on open source.

- Our stack produces fewer errors than our customers are used to. When they (and we) do see errors, we can tell them exactly what’s happening and help them fix things. This is a welcome change from getting the dreaded “unknown error” from the underlying player and having no clue where to start. We’ve already seen cases where we onboarded new customers and resolved errors that had been bugging them for years, simply because we can see exactly what’s going on.

- Since the VR use case made it necessary for us to manipulate bitstreams in real time, we can also switch “normal” videos without having to start a new player instance. This means that video switches and start-ups are much faster for the user.

- Also, our player doesn’t start with a blank “ABR memory”. It already knows the state of the network very well when the user starts playing a new clip or switches to a new camera, and it can select the most appropriate ABR level from the get-go.

- Since it doesn’t use any underlying player, our player can rely on a single encoding and packaging format across all platforms. This saves transcoding cost and complexity, and it has CDN benefits as well: a single format increases the likelihood that content is in the edge cache. In other words: less buffering.

- The single-format advantage extends to things like captioning formats and thumbnail previews. We don’t have a complex thumbnail preview support matrix, simply because we don’t need one. A single format works in our player across all platforms.

- We need to do each third-party integration only once to support it across all platforms. And since we control the full stack, these integrations are straightforward. Think ad systems, quality metrics, error reporting, etc. When we integrate these for one of our customers, all customers benefit. Examples include New Relic, Bitmovin Analytics, Yospace, and more. These are all “Integrate Once, Run Anywhere”.

- With our player, it’s easy to provide a consistent UX and look-and-feel across all platforms – we support the same user interface on all platforms.

- We handle things like suspend-resume and seamless stream switching entirely in our part of the stack. This has allowed customers to drop a lot of complex code that they previously had to maintain in many platform-specific versions. A simple example is syncing the state of two players during a hand-over.
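The ABR points in this list, and in particular the "ABR memory" that carries over when the user switches clips or cameras, can be illustrated with a deliberately simple Go sketch. The post does not describe Tiledmedia’s actual ABR algorithm; this uses a textbook EWMA (exponentially weighted moving average) throughput estimator with a safety factor, and the type and method names, smoothing factor, and bitrate ladder are all hypothetical. The point is where the estimator lives: one instance in the shared core, reused across clips, rather than a fresh one per player instance.

```go
package main

// ThroughputEstimator keeps an exponentially weighted moving average (EWMA)
// of measured download throughput. Because a single instance lives in the
// shared core rather than in a per-clip player, it survives clip and camera
// switches instead of starting from zero each time.
type ThroughputEstimator struct {
	alpha float64 // weight of the newest sample, 0 < alpha <= 1
	bps   float64 // current estimate in bits per second; 0 = no samples yet
}

// NewThroughputEstimator returns an estimator with the given smoothing factor.
func NewThroughputEstimator(alpha float64) *ThroughputEstimator {
	return &ThroughputEstimator{alpha: alpha}
}

// AddSample records that n bytes were downloaded in the given number of seconds.
func (t *ThroughputEstimator) AddSample(n int, seconds float64) {
	if seconds <= 0 {
		return // ignore unusable measurements
	}
	sample := float64(n) * 8 / seconds
	if t.bps == 0 {
		t.bps = sample // the first sample seeds the average
		return
	}
	t.bps = t.alpha*sample + (1-t.alpha)*t.bps
}

// PickLevel returns the index of the highest bitrate in ladder (ascending,
// bits per second) that fits within safety * estimate. With no estimate yet
// it falls back to the lowest level.
func (t *ThroughputEstimator) PickLevel(ladder []int, safety float64) int {
	level := 0
	for i, br := range ladder {
		if float64(br) <= safety*t.bps {
			level = i
		}
	}
	return level
}
```

For example, with a ladder of 1, 3, 6 and 12 Mbit/s and a 0.8 safety factor, an estimator that measured 10 Mbit/s during the previous clip lets the next clip start at 6 Mbit/s, whereas a fresh estimator has to start at 1 Mbit/s and climb.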

More Upsides

And I almost forgot: the Tiledmedia Player handles the highest-quality VR and the most flexible and scalable multiview. These are standard features of the Tiledmedia Player SDK, so our customers can delight their users with the best streaming and the most advanced use cases, all with one single player.

I am confident we will find more upsides as we work with more and more customers. And I’m excited to see that our start with highly advanced use cases now allows us to enable rock-solid “single-feed streaming”. It’s at least as exciting to see our technology finally reach the devices and hands of millions of customers, fulfilling the very reason we started Tiledmedia.

Interested in understanding how we can help you with your streaming challenges? Drop me a note at ray at tiledmedia dot com, or leave your contact details here.

July 5, 2024

Author: Ray van Brandenburg